Okay, we need to talk about this.

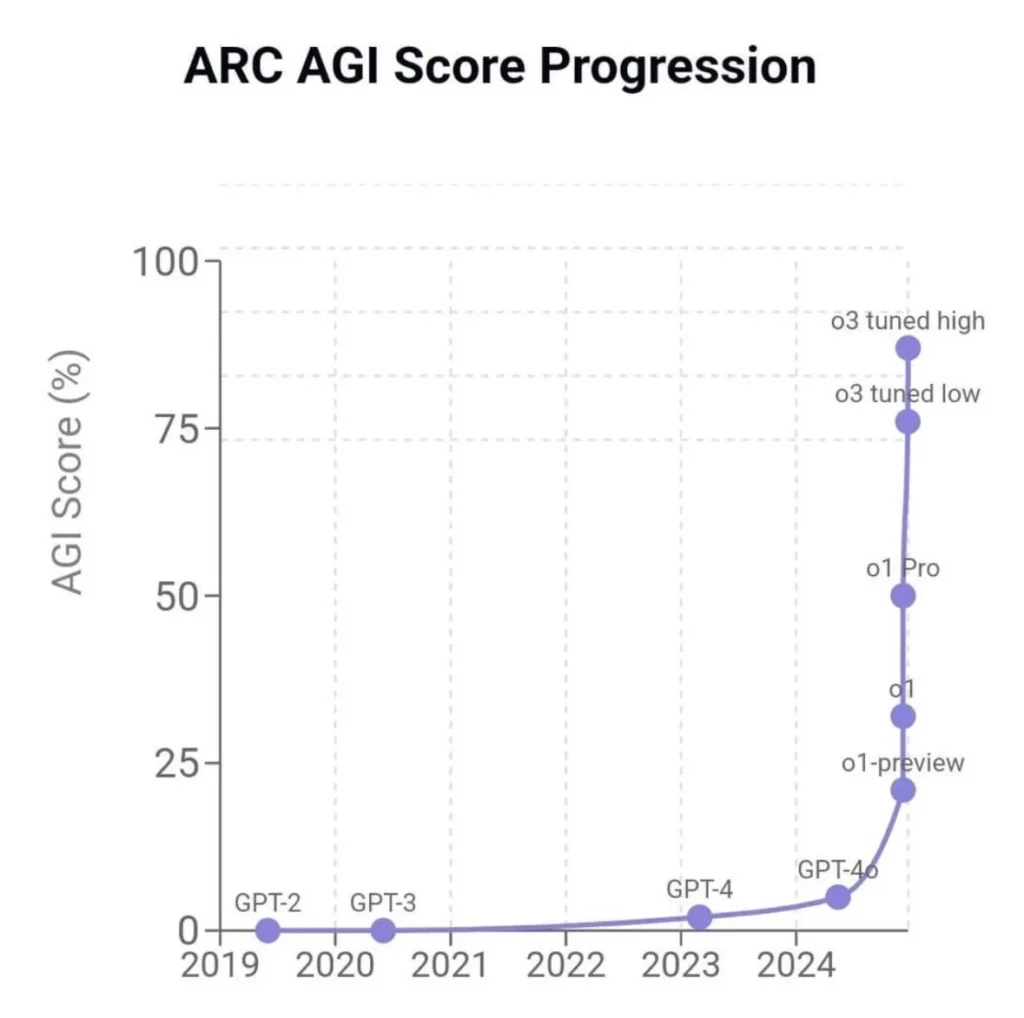

Last week, OpenAI announced o3, their new model, and tech Twitter went absolutely insane. If you can’t make sense of it, news like this can lead to a lot of fear about the role humans will play in the future.

What’s so special about o3? Well, it scored 87.5% on the ARC-AGI benchmark (in its high-compute configuration), a significant improvement over previous models.

Even though the model isn’t out yet, some people say we’ve reached AGI and that jobs won’t exist within 5 years. Others, Elon included, are saying that money will be meaningless in the future. Unless we find the right perspective, everything we’ve known and everything we are doing to better ourselves and our businesses may be for nothing.

The main questions I’m interested in are:

- Will we be considered insects to our AGI overlords?

- If AGI can do everything humans can and more, what the f*** do we do?

- If jobs won’t exist, what do we focus on if we want to thrive?

I’ve accumulated quite a few thoughts on this throughout my years of study. In this letter, I’ve compiled a few of the most compelling cases for human uniqueness.

I hope I can give you a positive way to make sense of the world and orient your behavior.

Buckle in, my friends. This is the greatest time to be alive.

By the way, much of this is condensed from my next book, Purpose & Profit, launching in February. It will be free (the digital version, at least).

AI vs AGI – A Critical Difference

To understand what AI is and what it means for us, we need to start at the origin of that term. Before AI, there was cybernetics, an idea laid out by Norbert Wiener in 1948.

Cybernetics, from the ancient Greek for “helmsman” (the same root that gives us the word “governor”), is the idea of automatic, self-regulating control in a system. Acting, sensing, and comparing the result to a goal is the fundamental loop of intelligent systems. Wiener’s key insight was that the world should be understood in terms of information: complex systems like organisms, brains, and societies error-correct toward a goal, and if these feedback loops break down, the system breaks down. Entropy.

Think of a ship approaching a lighthouse at night using constant feedback. The captain sees they’re drifting left of the light, steers right, then adjusts again when they’ve gone too far right.
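If it helps to see that loop mechanically, here’s a toy sketch in Python. It’s an illustration, not anything from Wiener: the ship’s position is a single number (negative means left of the light), the light sits at 0, and the hypothetical `gain` value is chosen so the captain overcorrects and has to steer back, just like in the story.

```python
def steer_toward_light(position: float, light: float = 0.0,
                       gain: float = 1.5, steps: int = 20) -> list[float]:
    """Toy negative-feedback loop: sense the error, act on it, repeat.

    Hypothetical model: position is the ship's sideways offset from the
    light (negative = left). A gain above 1 makes each correction overshoot.
    """
    history = [position]
    for _ in range(steps):
        error = light - position      # sense and compare: how far off the goal are we?
        position += gain * error      # act: steer against the error (here, a bit too hard)
        history.append(position)
    return history


path = steer_toward_light(-4.0)
print(path[:5])  # [-4.0, 2.0, -1.0, 0.5, -0.25]
```

Each pass overshoots the light by half as much as the last, so the error shrinks toward zero. Set the gain to 0 and the ship never corrects at all; push it above 2 and every correction overshoots further than the one before, so the ship never settles. Either way, the feedback loop has broken down.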

In 1950, two years after introducing cybernetics, Wiener published The Human Use of Human Beings. The book is now out of print, but its central idea relevant to today’s world is that “We must cease to kiss the whip that lashes us.” Wiener knew the danger was not in machines becoming more like humans but in humans being treated like machines.

This is what AI is: a cybernetic system that needs a governor. What we know as AI today, mostly chat apps we use to find information a little faster than a Google search, lacks one crucial trait: agency.

AI is a specialist that needs a generalist. A tool that needs a master. AI is useful for achieving a goal it is assigned, like winning at chess or Go. As long as AI must be assigned a goal and tested against it to demonstrate its intelligence, it is not even close to human intelligence.

Kortex AI launches in late January, and you’ll see what I mean. It can write extremely well if you have a second brain of writing to reference or you’re good at telling it what to do (we solve a lot of this for you). Mobile, desktop, offline, and light mode are also rolling out throughout January 🙂

But that’s the exact problem. Most people have been trained to be specialist tools, not innovative humans in control of their journey into the unknown. It’s no wonder they’re scared of being replaced. They should be. Since the day they were born, they’ve been assigned goals and have error-corrected to fit the mold their parents chose for them. Go to school, get a job, retire at 65. Entrepreneurship? Yeah, right. Save your money, get good grades, and listen to authority. Take the safe route. Stay subservient to the dominant system.

And before you know it, your mind will scratch and claw its way back to the life you were sold as comfortable. You may have the occasional desire to change, the depth of your soul crying out for you to break free, but the code in your head is so powerful that it quickly squashes that feature, mistaking it for a bug.

Do you see why I talk about this in almost every letter? It may sound like I’m beating a dead horse, but specialist conditioning is arguably the destroyer of humanity because it is the destroyer of creativity. What’s the solution? We’ll get to that.

Back to the story. Around the 1960s, a new perception of technology emerged: by inventing computers, we had externalized our central nervous system (our minds), and we all now shared one singular mind. One infinite intelligence. All potential information at our fingertips.

Unfortunately, we don’t hear much about cybernetics today.

Why? Two reasons. First, this new perception fueled poor incentives. Norbert Wiener’s warnings about intelligent machines ran counter to the aspirations of his colleagues, who were interested in commercializing new technologies. They wanted to profit from this. Second, John McCarthy, a computer pioneer, disliked Wiener. He refused to use the term “cybernetics” and instead coined “artificial intelligence,” becoming a founding father of that field.

With the meteoric rise in discussion around intelligent machines, we’re left wondering what makes humans special, or if we were even special to begin with. We’re the only species that’s made it to the moon, so there has to be something there, right?

AGI vs Humans

David Deutsch, influenced by Karl Popper, believes there is something significant about humans, and it lies in our ability to create infinite knowledge.

It starts with the need for creativity. All knowledge is created through the same process: conjecture and criticism. Trial and error. Variation and selection (in Darwinian terms).

In other words, guessing and correcting your guesses is how you accomplish anything you set your mind to. Psycho-cybernetics. This is how we learn, innovate, make progress, and understand almost anything in the universe. The difference is that humans can set their minds on anything, not just on a goal they were assigned. We can discover new goals that shape how we perceive opportunities, allowing our minds to error-correct toward those goals.
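Here’s a rough sketch of that guess-and-correct loop in Python, written as a simple hill climber. It’s a stand-in I’m swapping in for illustration, not Deutsch’s or Popper’s formulation. The `score` function, the hidden goal of 42, and the step size are all hypothetical: each round makes a random variation on the current best guess (conjecture) and keeps it only if it scores better (criticism).

```python
import random
from typing import Callable


def guess_and_correct(score: Callable[[float], float], guess: float,
                      rounds: int = 2000, step: float = 1.0, seed: int = 0) -> float:
    """Toy variation-and-selection loop (a basic hill climber).

    Lower scores are better. Every name here is illustrative, not a real API.
    """
    rng = random.Random(seed)                     # seeded so runs are repeatable
    best, best_score = guess, score(guess)
    for _ in range(rounds):
        candidate = best + rng.gauss(0.0, step)   # variation: a new conjecture near the old one
        candidate_score = score(candidate)
        if candidate_score < best_score:          # selection: criticism discards worse guesses
            best, best_score = candidate, candidate_score
    return best


# Hypothetical goal: find 42 without being told it, only how far off each guess is.
found = guess_and_correct(lambda x: abs(x - 42.0), guess=0.0)
print(round(found, 1))
```

Notice that the goal is handed to the loop from outside. By the argument in this letter, that’s exactly what separates a tool like this from a person who can discover new goals of their own.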

Deutsch believes that humans are “universal explainers.” That we are capable of understanding anything that is understandable within the laws of nature.

We create explanatory theories that reveal the deep structure of reality, allowing us to guess and predict more efficiently, which breeds faster progress over time.

This knowledge allows us to understand things we’ve never directly experienced, like stars and galaxies. We can understand a rocket even if we’ve never built one. And if we can understand it, we can eventually build it if we have the knowledge to do so. There is a logical sequence of steps, and each step requires you to have the knowledge for that step. In your practical life, if you can’t achieve something, it’s for a simple reason: you either don’t understand it or don’t have the knowledge to achieve it. For high-agency humans, this is liberating. For low-agency machines, this is blasphemy.

This is what many get wrong about AGI.

AI, or artificial intelligence, is an incomplete system that must be assigned a goal, the way many animals and low-agency employees are programmed to pursue one.

AGI, or artificial general intelligence, is a complete or universal system, like a human who is not limited to a small subset of what is possible. AGI may have more computational power or memory, but there is no concept it can understand that we can’t understand ourselves. And nothing stops us from using universal computers or augmenting ourselves with more computation and memory.

The point is that you can achieve anything within the realm of possibility, but only if you have the knowledge to do so.

You are not doomed to the default path of society or the rule of AGI.

The 5 Human Capabilities – Can AGI Surpass Them?

The thing about AGI is that people can’t seem to settle on a definition for it.

Some people see it as a tool that will automate and run their business hands-off. Some think it will be just like us, a person. Some think it will be a superintelligence that completely surpasses human capabilities (they often label this ASI).

The last one is the most frightening because people’s minds hop, skip, and jump to the conclusion that we may be rendered irrelevant. When we feel stressed, our minds narrow and we can’t think straight. We fail to understand.

The questions here are:

- Are human capabilities actually limited?

- Do we not have the capability to learn and do anything that AGI could do?

- Ultimately, are there any limits on what we can think and how we think?

It’s fun to speculate about these things, and we can do that all day instead of focusing on what matters, so let’s break these capabilities down.

Mainly, we need to pay attention to five of them, as noted by AGI researcher Carlos De la Guardia (also influenced by Deutsch): computation, transformation, variation, selection, and attention.

Computation

Is there any limit to what we can compute?

No. Once you have a universal computer in your hands, computing anything that can be computed is just a matter of time and memory.

If AGIs are universal computers too, they can’t compute anything we can’t, so they have no fundamental advantage over us. Even further, if we can augment our brains (which I don’t consider outside the realm of possibility), we will remain on par with AGI as it accelerates.

Transformation

Transformation is creation. We turn raw materials into rockets given the right knowledge.

Human hands and bodies seem to be especially good at creating anything, given a specific sequence of operations. We’ve built spaceships and telescopes, which means we can build the thing that builds the thing. We are generalists that build tools to thrive in any environment. We are not animals bound to one niche.

The question is:

Is there a limit to what these basic operations can do when strung together in the right way?

Again, the answer is no. If humans could teleoperate a gorilla, there would be a sequence of steps it could take to build a rocket, given enough time. And no, I’m not saying a single gorilla. Imagine if Elon were operating the gorilla. What would he do?

The thing here is time. Transformation takes time, and a singularity won’t change that, just as the Enlightenment and the Big Bang didn’t. Time is a compression algorithm that prevents everything from happening at once; the Enlightenment and the Big Bang clearly didn’t put rockets in the sky overnight.

So far, the AGI worry seems to stem from a fundamental misunderstanding of reality itself.

How To Create Knowledge

After computation and transformation, there is variation, selection, and attention, which have to do with navigating idea space (or the unknown). We can compute and transform, but do we have limits on the knowledge that allows us to do so?

Knowledge serves two functions.

The first is to make specific things happen, preferably good things rather than bad. The second is to capture patterns in reality. This allows us to store information efficiently so that we aren’t always starting from scratch in our pursuits. We understand big-picture patterns like the sun rising and setting each day and the seasons changing throughout the year.

Without this understanding, much of our lives would fall apart. Capturing patterns allows us to plan ahead. We understand that we would freeze to death in a cold environment, so we use deposits of knowledge like jackets and hotels to keep us warm while we travel.

Think of idea space—or the unknown—as a universal map with light and dark spots. The light spots are areas you’ve explored. The dark spots are where your potential lies.

This map is a surface area for ideas that can be discovered and tested against reality to verify their validity. When the results don’t move you closer to your goal, or move you further from it, a problem is revealed, and you must error-correct toward the goal.

Variation

Is there a limit to the number of new ideas we can come up with to survive and achieve what we set our minds to?

With computation, we can navigate the entire space of ideas. With agency, we can take any step within that space and eventually stumble across a good idea (after many bad ones). With creation, we can move in unique ways, like flying over a forest rather than walking through it.

So, we can understand anything, create anything, and discover an infinite set of new ideas to solve an infinite string of problems. Again, AGI can do the same. We are both bound by the laws of nature, but any possibility inside of that is within reach.

Selection

We can come up with any idea, but can we find the good ones?

The potential problem here is that it is difficult to make cumulative progress without learning from mistakes. It wouldn’t be fun to start from scratch every time, say, building an electric car without anything we learned from building gas cars. We wouldn’t be very developed as a species.

As universal cybernetic systems, we can become more efficient at navigating idea space so we don’t wander lost. We error-correct. No fundamental difference here either.

Attention

One other aspect that humans take for granted is our ability to change our focus by changing our perspective.

When a problem occurs, where does your attention go? If you want to build a rocket, does it help to ask the old gods to do it for you? Or can you change lenses to view the situation in a way that allows you to perceive opportunities?

Paradigm lock and attachment to ideology are massive problems for humans, but we do have the capability to change where our attention goes when problems come up. We can put on a spiritual lens to find peace and a scientific lens to find progress.

Identifying with a purely ascending, “spiritual” philosophy is no different from being an incomplete system that will fail to solve certain sets of problems. Spirituality is a great lens or tool, but a bad master, and not the be-all and end-all.

Now, since AGI does not seem like it can surpass us in any way unless it bends what is possible (we would have a very different problem on our hands at that point), what do we do?

That doesn’t alter the fact that life as we know it will change, jobs will be replaced, and the unknown creeps nearer.

In my opinion, the only option is what it has been:

To dive in.

The Answer Has Been & Will Be To Become A Creator

Be a creator and you won’t have to worry about jobs, careers, and AI. – Naval Ravikant

As I’ve discussed in 80% of my letters, and as Naval put so eloquently here, the answer is to become a creator. Specifically, a value creator.

When I say this, people assume I mean a myopic little “content creator.” Someone who posts content without realizing the depth behind it.

Let’s string this together to help it click:

The word “entrepreneur” is of French origin. It comes from the verb “entreprendre,” which means “to undertake” or “to do something.” In the 16th century when translated to English, it became a noun referring to a person who undertakes a business project.

In other words, an entrepreneur is someone who is doing something. It is not a title or label but an act. It is the commitment to a high-agency life. A commitment to doing things without permission from someone else. To set your own goals and navigate the unknown to achieve them.

Therein lies the problem. People want to be told what to do, when that’s exactly what will get them replaced. That doesn’t mean you should stop learning from other people; it means you shouldn’t treat any job, skill, or career as an end in itself.

In this sense, a “creator” is someone who is creating something. It’s not a title or fancy new kind of job where you can sit in front of a camera and do minimal work. It is a way of being.

It just so happens that the highest leverage place to create—right now, at least—is on the internet. It is the path of high agency. You don’t need permission to create something and post it on the internet. You don’t need permission to navigate idea space and find the information you need. This may change in the future, but that only reinforces the point. No matter if it’s the internet or intergalactic space, the answer has been and always will be to become a creator.

Of course, as we’ve learned, that doesn’t mean it’s easy. It doesn’t mean you can skip trial and error. It doesn’t mean one course will give you all the answers, because that’s not how the mind works. That’s not how time works. And that’s not how AGI will work either, once problems like jobs are solved and we’re exposed to an entirely new set of problems.

You can go through something like 2 Hour Writer or the One-Person Business Launchpad, and that helps give you direction to take the first few steps into the unknown, but most people quit because they fail to realize that you still need to error-correct.

That’s what creators do. They solve the infinite set of problems that life presents. Without problems, there is no creativity. Without problems, there is no purpose. Pain and suffering stem from the inability to understand problems and, even more, from relinquishing your ability to solve them. A world without creativity and purpose is a world without life.

The mark of a sovereign individual is that they learn how to learn:

- They have an evolving vision for the future

- They build a meaningful project as one stepping stone

- They identify problems that prevent progress

- They generate ideas and test solutions

- They become more efficient with time

- They deposit their creation and knowledge

- If valuable, they are rewarded by the monetary system of that society

- If not valuable, they error-correct until valuable

- And lastly, they never, ever quit because someone else’s vision trumps their own.

Do it all. Write. Design. Market. Sell. Film. Code. Be the generalist you were born to be. Be the orchestrator of ideas. The governor of thought. AI is simply a tool that now allows you to learn and do all of these. Once it becomes your master, you lose.

Nobody can tell you what to do in the future. But has it ever been any different? We’re just talking in circles at this point. You’re looking for a quick fix, as always, and you know that the longest path is the quickest fix. The principles haven’t changed; you just haven’t taken the leap.

Thank you for reading.

I hope it helped.

– Dan

P.S. We didn’t have time to talk about jobs: their importance, what’s going to be replaced, and the rest.

We can talk about that later.

For now, it could do some good to contemplate the potential outcomes of jobs going extinct and everyone being able to pursue their life’s work instead.

They’ve always been able to, but this may be a forcing function for those who have been putting it off.